Workshop Report: Evaluation Developers x EdTech Strategy Lab

On June 11, the EdTech Strategy Lab hosted the second session of its Summer Workshop Series. This ongoing series is designed to foster interactive discussions, with this workshop specifically focused on deepening our understanding of the needs and requirements of framework developers.

Strategy Lab Insights

The session began with a series of insights shared by Beth Havinga, the Managing Director of the EEA. She shed light on the findings of the EdTech Strategy Lab research conducted so far. The research revealed that there has been a surge in the development and publication of frameworks and evaluation systems. Nearly 140 such systems have been published globally, and in the last 12 to 18 months, an additional 40 have entered the pipeline. These frameworks vary in focus and are driven by different forces. Some concentrate on outputs, others on solutions, safety, accessibility, and even technical requirements. This diversity reflects the complexity and multifaceted nature of the EdTech ecosystem.

Broadly speaking, these frameworks can be classified into three main types: research- or academic-based, commercially focused, and developed by the public sector. Each type is driven by distinct objectives. The research or academic model aims to define efficacy levels, impact, and evidence, and to design certifications. Commercial frameworks, on the other hand, focus on legitimising EdTech products and often require payment for certification. Frameworks emerging from the public sector predominantly aim to define mandatory standards and have a technical focus.

The development of these frameworks is not without challenges. One of the key issues is the limited communication between the different stakeholder groups developing these mechanisms. This lack of communication can lead to duplication of efforts and inconsistencies between frameworks.

In addition to the diversity of frameworks and the challenges of communication and alignment, Beth highlighted several other well-documented challenges. These include the time required to complete evaluation processes, which can range from 3 to 18 months. The acceptability and recognition of certification also differ by institution or country, leading to potential issues with interoperability between systems. Furthermore, uptake of these frameworks is relatively low, incentives to adopt them are minimal, and support for their implementation is insufficient.

The session went on to provide valuable insights into the current state of EdTech evaluation frameworks. It highlighted the challenges faced by the sector and underscored the need for greater collaboration and alignment among stakeholders. Workshop participants also heard from two organisations with working evaluation models.

UNICEF’s Global Learning Innovation Hub and the EdTech for Good Framework

Milja Laakso and Jussi Savolainen from UNICEF’s Global Learning Innovation Hub shared valuable insights about the EdTech for Good Framework and the Learning Cabinet. The Global Learning Innovation Hub, based in Helsinki, focuses on finding innovative solutions to address the learning crisis in low- and middle-income countries, where the percentage of children unable to read and comprehend basic text has been on the rise.

The Hub aims to bring together traditional and non-traditional actors in the education space to imagine the future of learning and tackle the challenges faced by the global education landscape. To achieve this, UNICEF has partnered with various organisations and developed initiatives such as the EdTech for Good Framework and the Learning Cabinet.

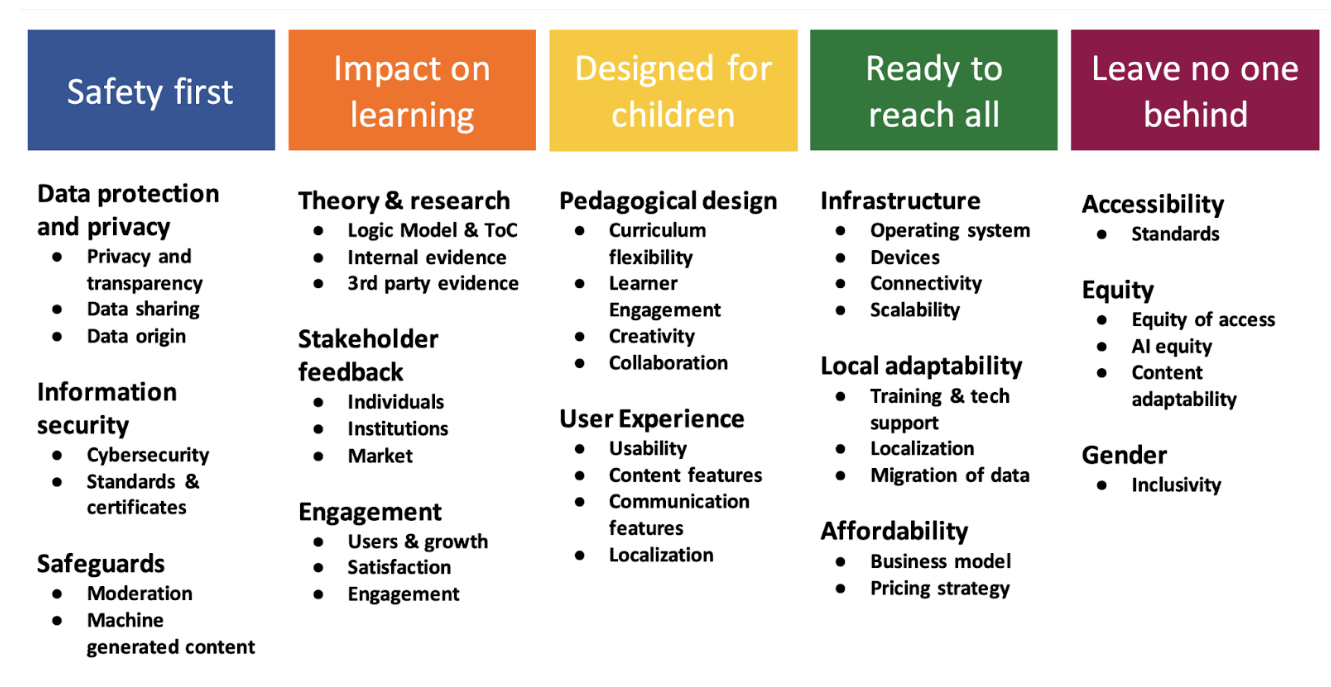

The EdTech for Good Framework, a public good, is designed to curate and assess EdTech tools, and features 132 questions across 5 categories to evaluate learning and teaching tools for early childhood development and the K-12 context. Centred around five key pillars (see below), the framework places significant emphasis on safety, impact on learning, scalability, inclusion, equity, and accessibility.

Additionally, the team at UNICEF is in the process of developing the Learning Cabinet, an online discovery platform, created as an output of the EdTech for Good Framework. This platform aims to provide a curated collection of EdTech tools that have been screened, targeting decision makers in larger scale organisations or governments, particularly in diverse geographical contexts where UNICEF operates.

During the discussion, the UNICEF team underscored some of the key challenges related to the development of evaluation mechanisms, including the time-consuming process of screening tools, keeping the platform up to date, and balancing the development of additional evaluation frameworks.

Fireside Chat with Michael Forshaw from EdTech Impact

Providing a second example of evaluation in practice, Michael Forshaw, CEO of EdTech Impact, also shared his perspectives during the workshop. EdTech Impact was established in the United Kingdom about six years ago with the goal of addressing the challenges surrounding the transparency and discovery of EdTech solutions.

In the wake of the COVID-19 pandemic, EdTech Impact has emerged as a trusted resource, offering education technology reviews, ratings, and certifications. Its core mission is to assist schools in discovering new EdTech solutions while enabling them to make detailed comparisons using a wealth of user-generated data and reviews. With approximately 500,000 active users, over 26,000 reviews and ratings, and about 5,000 EdTech products listed across 100 different categories, the platform provides a comprehensive ecosystem for educators.

Central to the platform is a set of metrics aimed at evaluating EdTech quality through a holistic framework, with a clear focus on user experience and outcomes. Michael emphasised the key questions that drive the platform, including the desired outcomes of a tool or resource, its claims, and how users engage with the EdTech solution.

EdTech Impact's approach involves collaborating with industry to promote transparency and accountability within the EdTech ecosystem. Its goal is to incentivise companies to collect feedback from customers through a specific lens of impact metrics. This not only benefits those evaluating and implementing EdTech solutions but also offers companies opportunities to enhance their brand and visibility and to generate leads. The feedback received from the platform can be leveraged to improve products and elevate the overall EdTech ecosystem.

Additionally, EdTech Impact evaluates lawfulness, safety, and pedagogical quality, and has recently launched EdTech Impact Manager. This product allows schools to leverage EdTech Impact’s rich dataset, taxonomies and feedback tools to monitor the effectiveness of their existing technology investments, empowering them to generate relevant data specific to their context, and make informed decisions around value for money.

Discussion Points

During the workshop, participants discussed the primary drivers for the development of evaluation frameworks, highlighting factors such as decision-making, safety and security, impact and pedagogical evaluation, advocacy, and accountability within the EdTech industry. They also emphasised the need for efficacy data to drive decision-making processes, the need to evaluate safety, particularly with AI solutions, and the need to ensure that the focus remains on pedagogy, not just technology.

Participants also suggested that the process of designing the ideal evaluation framework requires continuously questioning assumptions and ensuring inclusivity within these discussions. For many, building on existing knowledge and frameworks and considering the long-term sustainability of the mechanisms put into place were important considerations. Several members of the group highlighted the need for easy operationalisation, especially for schools with limited time, resources, and technical knowledge.

Asked how it might be possible to increase the transparency of framework processes and their outputs, the workshop participants also put forward a number of excellent suggestions, including:

Firstly, participants suggested publishing all standards to ensure transparency and accessibility within the broader education ecosystem.

It was also pointed out that it is important to align on what exactly is considered strong enough evidence. A common understanding here would be a step toward establishing consistent evaluation criteria.

Standardising the outcomes of evaluations or tests, as well as framework processes, would promote comparability across schools.

Similarly, the context of each school should also be stated to allow for transferability, taking into account factors such as student numbers, demographics, and specific learner needs. Including the vision, digital strategy, and transformation journey of schools and providing evaluation results to EdTech providers for feedback were also flagged as important considerations for improving the overall quality of educational products.